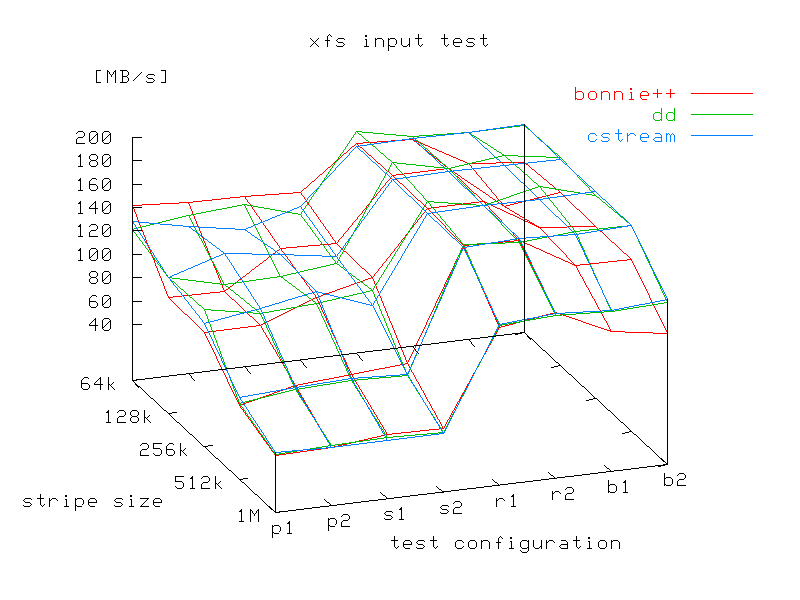

| Code | Explanation |
|---|---|
| p(lain) | Filesystem was formatted with default parameters. |
| s(tripe) | Filesystem was formatted with the `-d su=stripesize,sw=8` options (we have 8 disks). |
| r(eadahead) | Like above, and the kernel min-readahead/max-readahead parameters were raised to 256/512 from the default 3/31. |
| b(dflush) | Like above, and the kernel bdflush parameters were set to `100 1200 128 512 15 5000 60 20 0`. |
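The four configurations in the table build on each other, which can be sketched as a small dry-run script. This is only an illustration: it prints the commands instead of running them, the device name `/dev/md0` is a made-up placeholder, and the `/proc/sys/vm/` paths are my assumption of how these parameters are exposed on a 2.4 kernel, not something taken from the test setup.

```shell
#!/bin/sh
# Dry-run sketch: prints the setup commands for one configuration code
# (p, s, r or b) instead of executing them.
DEVICE="/dev/md0"   # hypothetical array device, not from the original tests

print_setup() {
    code="$1"      # configuration code: p, s, r or b
    stripe="$2"    # stripe unit, e.g. 64k (ignored for p)

    # Filesystem creation: plain defaults, or stripe-aligned for 8 disks.
    case "$code" in
        p) echo "mkfs.xfs $DEVICE" ;;
        s|r|b) echo "mkfs.xfs -d su=$stripe,sw=8 $DEVICE" ;;
        *) echo "unknown code: $code" >&2; return 1 ;;
    esac

    # Readahead tuning applies to r and b (assumed /proc paths).
    case "$code" in
        r|b)
            echo "echo 256 > /proc/sys/vm/min-readahead"
            echo "echo 512 > /proc/sys/vm/max-readahead"
            ;;
    esac

    # bdflush tuning applies to b only (assumed /proc path).
    case "$code" in
        b) echo "echo 100 1200 128 512 15 5000 60 20 0 > /proc/sys/vm/bdflush" ;;
    esac
}

print_setup b 64k
```

Calling `print_setup s 64k` prints only the `mkfs.xfs` line, while `print_setup b 64k` prints all four commands, mirroring the "like above and ..." wording of the table.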

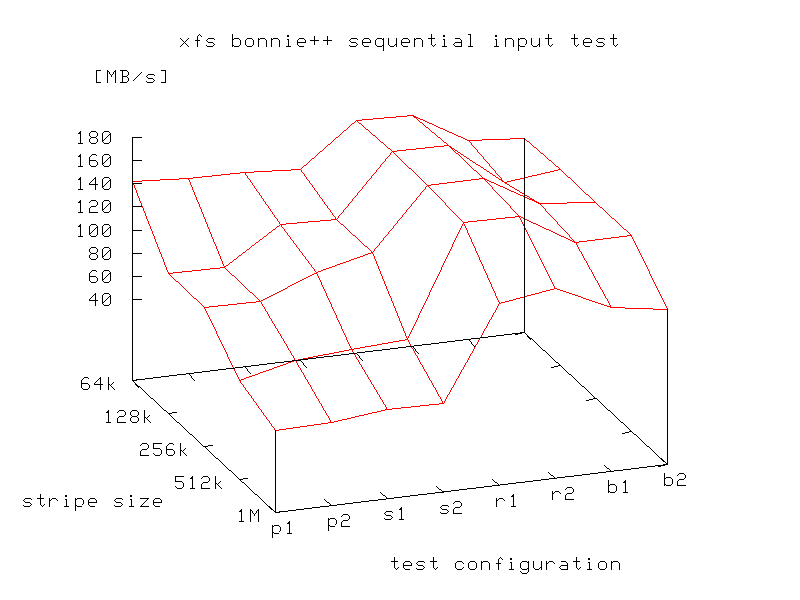

`# bonnie++ -u nobody -d . -s 1024 -n 16 -r 64`

`# bonnie++ -u nobody -d . -s 1024 -n 16`

Results:

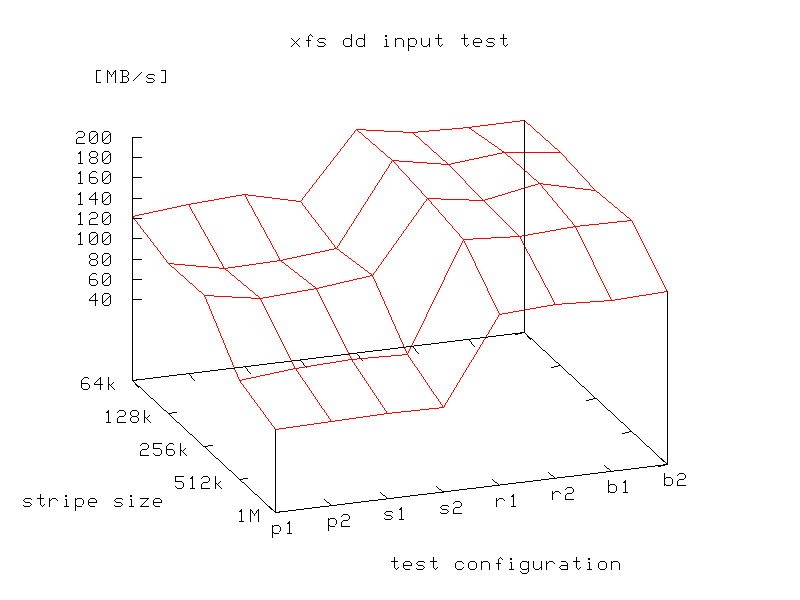

`# time sh -c "dd if=/dev/zero bs=64k count=16384 of=jonagy ; sync"`

`# time sh -c "dd if=/dev/zero bs=1024k count=65536 of=jonagy2 ; sync"`

Results:

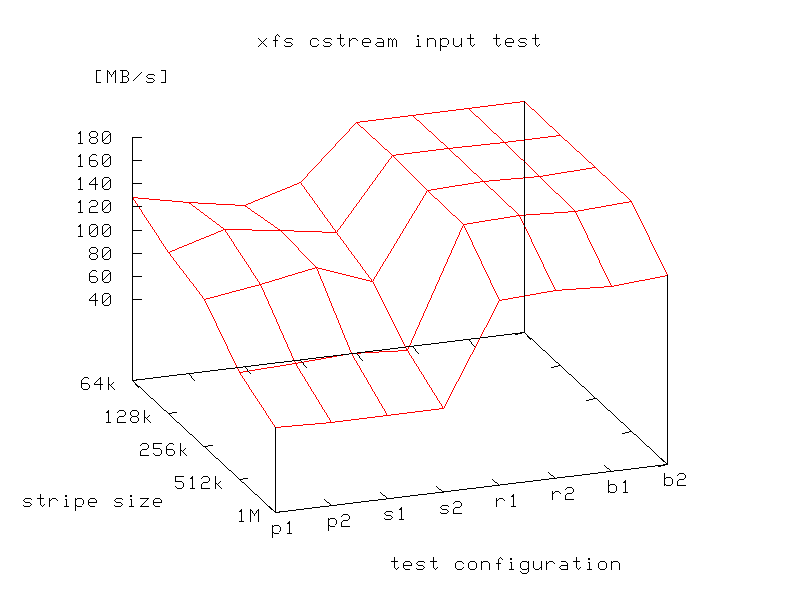
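For reading the dd numbers, it may help to spell out how much data each run writes and how a throughput figure follows from the elapsed time reported by `time`. The helper names below are hypothetical and the 8-second figure in the usage note is an invented example, not a measured result.

```shell
#!/bin/sh
# Total data written by dd in MiB: block size (KiB) times block count.
dd_total_mib() {
    bs_kib="$1"
    count="$2"
    echo $(( bs_kib * count / 1024 ))
}

# Average throughput in MiB/s from a size in MiB and elapsed seconds.
throughput_mib_s() {
    awk -v m="$1" -v s="$2" 'BEGIN { printf "%.1f\n", m / s }'
}

dd_total_mib 64 16384      # first run:  bs=64k,   count=16384 -> 1024 (1 GiB)
dd_total_mib 1024 65536    # second run: bs=1024k, count=65536 -> 65536 (64 GiB)
```

With a hypothetical elapsed time of 8 seconds for the first run, `throughput_mib_s 1024 8` would give 128.0 MiB/s. Because the timing wraps both `dd` and `sync`, the figure includes flushing the page cache to disk.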

`# cstream -B 1m -b 1m -n 1024m -i - -o cgigasz -v 2`

`# cstream -B 1m -b 1m -n 65536m -i - -o cgigasz2 -v 2`

Results:

I guess the breakdown of the r/b plateau at the 512 KB stripe size correlates with the 512 KB max-readahead value. So in the next step I will raise the buffer size to 1 MB and then compare input and output speeds.